Atlan Team

Introduction

It’s be a little over a year since we introduced our MalwareGAN in this blogpost here.

While that article focused on evading Endpoint Detection & Response solutions by leveraging the mathematical and logical blind spots of these systems, this blog post will focus on taking some of these concepts deeper into the kill chain and focusing on evading XDR, NDR and the domains that typically Managed Detection and Response and Hunt teams are responsible for.

As part of our work on Turul and MalwareGAN, we are continually working at reducing noise and our on-host footprint, and also to reduce the capabilities of other telemetry analysis engines in areas such as beaconing, post exploitation and enumeration.

In my previous post I outlined some introductions around how Clustering and Classification conceptually work in relation to the events generated by binaries, both malicious and benign. In this post I will discuss how Clustering and Classification can be used to zone in on activity that can be used to identify and contain malicious actions and how we are performing Clustering attacks on these systems too.

In the following diagram you can see the cyber kill chain:

In order for malware and associated infrastructure and malicious activities to be successful from the viewpoint of the attacker, there needs to be evasive thinking and considerations across the entire killchain.

It is no use having flawless EDR evasion if your beaconing activity or internal enumeration is resulting in alerts.

Over the course of this post we will dicuss how a typical alert is raised and what an analyst might do to work through the alert. Then dicuss how Machine Learning is used for Clustering and Classification to programmatically zone in on these events and finally how we use logical attacks against these solutions, to evade them.

Alerts and Analysts

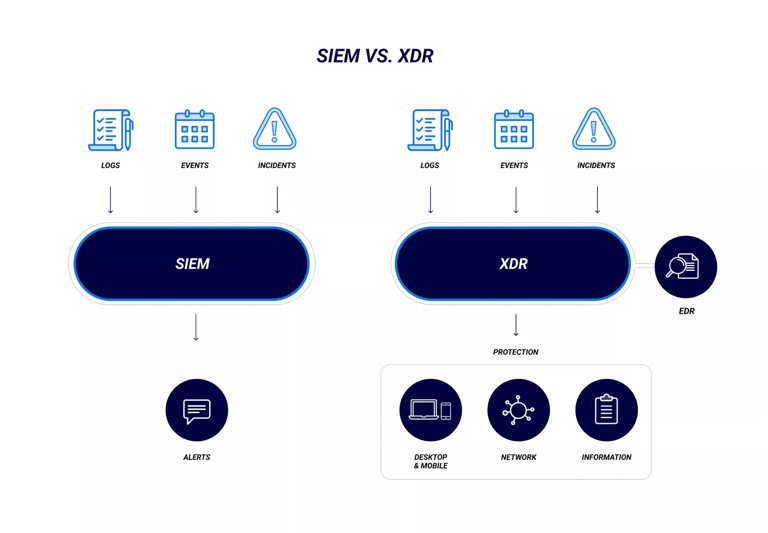

Security Operation Centers (SOCs) use various tools that collect, sort and aggragate events generated by your EDRs, XDRs, and other sources, and then create rules and other configurations to generate incidents for them to investigage. These are events happening on specific machines, in certain parts of networks or similar and allows analysts to zone in on suspected malicious events to investigate. The image below shows some of these tools that are aggregated:

In terms of the incidents themselves, and depending on the telemerty collected these can be graded in terms of severity. For example if you're an analyst and you see events where a process is trying to access LSASS repeatedely, versus perhaps an unusual connection over HTTPS to an domain then clearly a level of severity can be attributed to these events, and then prioritized.

This means that a lot of a SOC analyst’s time is triaging incidents, and either responding to them individually, or manually grouping sets of similar incidents. Specific tools can assist to group these events together, and obviously there are no shortage of vendors that come into offer these kinds of capabilities.

This also means that the telemetry collected is very important, given that if there is no event being generated when LSASS is being accessed, or events are happening in such a way that they do not generate a set of events that correspond to a specific rule within the tooling, then there is no way for the analyst to know, sometimes in a network with over 100,000 endpoints what to actually investigate.

Obviously the investigation is important itself, given that once an analyst where to remotely connect to the endpoint, then your implants and their actions come under much closer inspection.

Clearly an attacker's aim is to NOT generate events, and ideally to populate logs in such a way that when investigated then they cannot be grouped into a set known bad actions.

Machine Learning - Classification and Clustering

If we spend a little bit of time to think about the orgin of malware, then perhaps we can run some mental models around why the tooling is the way it is, and why many tools that rely on these kinds of algorithms maybe be possible to evade, by turning these concepts against themselves.

In a fantastic book i highly recommend: A Pathology of Computer Viruses, by David Ferbrache, it is outlined how initially very low level code was developed to optimise processor workloads. You can image how technically competant these individuals must have been to write "worms" in order to speed up the computation of instructions that these processors were working through. Eventually some of these developers began to write some of these "worms" to perform other activities, some purely for humour, that no longer served the orginal puporse of performance optimisation.

Initially these "worms" and other viruses, were believed to be make belief by the media and that computer viruses could not exist. However they did exist, and as a result a percentage of computer code that was being executed in the collective computing world was now performing undesriable actions.

While Machine Learning is a huge topic today, much of the foundations mathematically and computationally had already been laid down by the time of these malwares, and so if you were one of the first computer responders what would your approach be?

It doesn't take much of guess to write programs that would seperate good code, from bad code. To zone in on the anomous behaviour by classifying, and seperating the known bad from the good. In our first post we discussed how antivirus engines can work in a similar fashion to flowcytomtery, which essentially takes human (or other) cells and based on characteristics seperates them, so that you can Cluster for example cells with attributes that are malignant, away from others deemed healthy.

Thus for a very long time, the premise is that for example there is 99% of code that is good, and 1% of code that is bad, and the aim is to find, and stop the bad code. This same approach was then applied to actions that the code takes.

If I have 100 HTTPS connections, and have all of their characteristics - connection time, domain, intervals etc, then again i can use similar concepts and algorithms to Cluster those connections which I believe, based on some assumptions and rules that i have worked to create, as known bad.

This type of approach can, and has been, applied to a vast array of data that network sensors, EDR, and other telelmetry generating engines deployed inside corporate networks to assist, sometimes automatically, sometimes to aide a manual analysis, zone in on the suspected set or groups of events, of any nature.

This is why for example sophisticated Red Teamers will use malleable C2 profiles - ie model HTTP traffic of benign applications, so that it does not look anomolous.

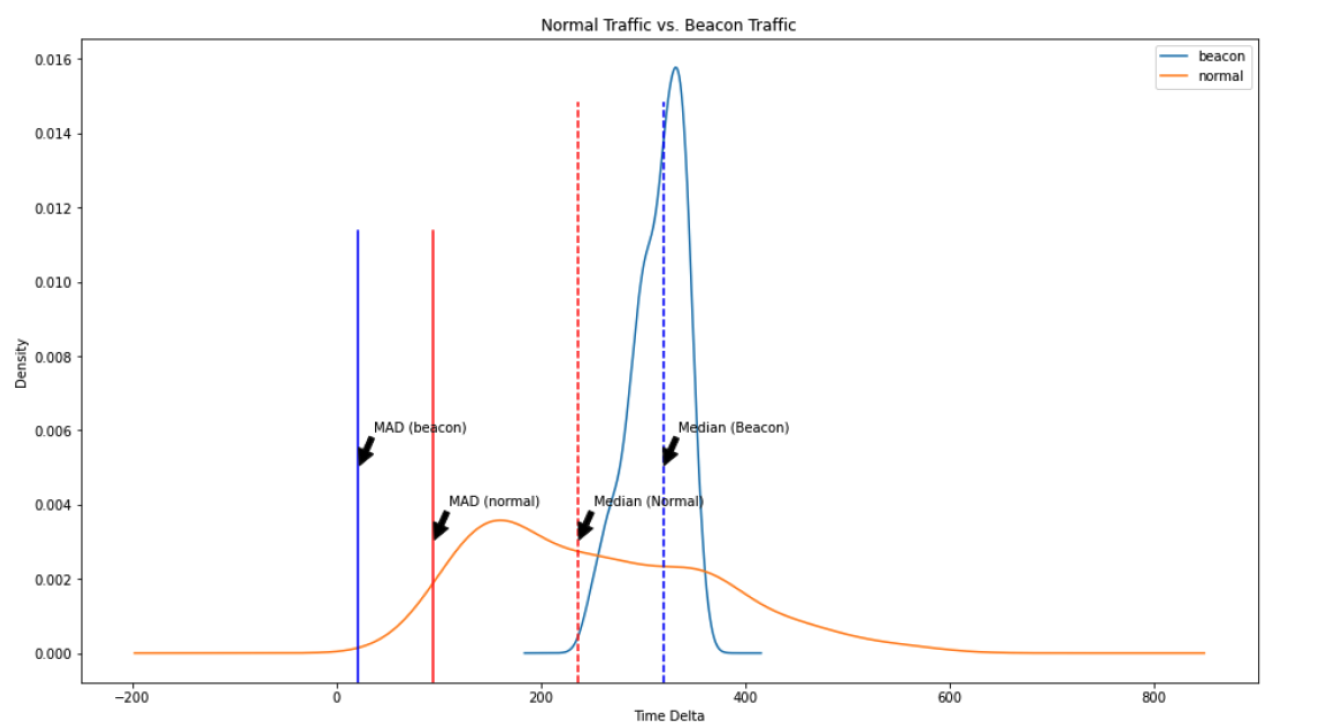

In the example image below you can see how malware beaconing can be Clustered from this thesis. Remember that they are working with a lot of data here, and when a collosal amount of normal beheviour is analysed, against malcious traffic generated by beacons that connect out - potentially with Jitter too - then it is possible to zone in on some as being anomolous. You can learn more in this video and this one, or even learn about Clustering and Classification in our online course here.

Obviously this can applied, and is applied to inter-endpoint traffic, inter-endpoint-fileshare traffic, inter-server-endpoint traffic, inter-process on a host, and so on and so forth, and mostly you find that the range of NDR, XDR, and other solutions are really working at collecting this vast amount of data, then running the data through a set rules to idenfity which patterns of traffic, which inter-endpoint and similar connections, process creations, which EVENTIDs, and similar are unusal.

Turul and Malware GAN research

While we have made significant progress with applying our Clustering attacks against EDRs already, for exmaple below with Turul and MalwareGAN against Sophos EDR:

And early on against Crowdstrike and ATP E5 in parallel:

Our focus is now on applying these same kinds of techniques in post exploitation and in the final section of this short post, we will conceptually discuss the why's and the how's of a few example areas without giving a complete overview:

Beacon

While we have obviously focused on the beacon itself as part of Turul, to evade the EDRs we targeted, I will outline 1/3 of the approach that we take to ensure our evasions.

A typical malicious codebase is small as it largely comprises code for malicious purposes. In contrast, a legitimate program’s codebase is considerably larger and even if it contains segments that resemble malware, it also incorporates a substantial portion dedicated to legitimate functionalities and purposes.

For instance, a backup solution might include a feature resembling ransomware, capable of encrypting numerous files on a computer. However, it goes beyond mere encryption, it creates a designated folder, duplicates the selected files, encrypts them, verifies the encryption, deletes the originals, possibly uploads the files to a server, notifies the user throughout the process, at least provides a command-line interface, etc. While adding more code to malware isn’t always effective, consider this as a simple example. This is particularly true if the malware undergoes reverse engineering and gets flagged manually.

To address this issue Turul transforms programs with MalwareGAN by inserting carefully selected redundant code at OST compilation time. During runtime, the compiled OST executes redundant or junk code in between the original code, thus confusing EDR ML models in one aspect.

Turul’s transformation results in the change of the static make-up of the programme as it appears now to be substantially different from the original if analysed through static antivirus engines.

Every new transformation yields a program different enough from the original to bypass EDRs statically. Trend Micro Locality Sensitive Hashing (“TLSH”) can be used to show the differences between the original and the generated binary.

TLSH distance scores represent the differences between two hashes. If the score surpasses a specific threshold, the hashes are considered different or entirely distinct, and below the threshold, they are considered similar. Typically ranging from 0 to 300, these scores can extend to 1000. Given that executable files often share common components, a degree of similarity is expected. A study by Trend Micro found that TLSH scores of 85 indicate nearly unrelated executables. It’s essential to note that a score below 85 does not necessarily trigger an EDR to flag the binary. It serves as a reference-point rather than an automatic indicator for flagging, offering insight into the interpretation of TLSH distance scores.

We have found for example on our engagements that if you achieve a score >80 TLSH then you can freely use some Red Team tooling against advanced EDRs.

While we have not addressed the other programmatic changes we apply to ensure that dynamically we can evade too, given that we are commercialising the product, there are 2/3rds of other factors that must be considered.

Enumeration

As part of the documentation for Turul, we have outlined how we have de-coupled the post exploitation modules from the beacon process itself and I will share some conceptual ideas as to why you may consider doing is.

Of course we ensure that we have a minimum TLSH score that each PEM (Post Exploitation Module) needs to achieve while being generated, however the concepts below attack some of the Classfication and Clustering approaches in other segments of the network.

The PEM currently consists of:

| Tools | Link |

|---|---|

|

Sharpview |

|

|

Rubeus |

|

|

Sharpsniper |

|

|

Certify |

|

|

SharpDomainSpray |

|

|

Sigwhatever |

If for example I have a single beacon that wants to use SharpDomainSpray to spray the DC to enumerate weaks passwords and land me credentials, then how is this being interpreted from a data generated perspective?

Password spraying against SMB on a Domain Controller results in event ID 4625 "logon failure" being logged on the DC. However if i were to Cluster these then what do i have:

- Single process

- Single IP

- Specific Event ID

- Rapid sucession of logon failures

Again this is not hard to Cluster together as malcious. But what if for example i had a network of PEMs, that worked together to password spray, from different IPs, different processes (potentially mimicking valid processes), at different times.

Now you can begin to see that we are trying to break the corelation of typical activity that is easy to Cluster and apply a set of rules to identify.

The same can be said for any other enumeration activity from Kerberoasting or similar - the aim is NOT to perform these events from single processes at scale, but rather to de-couple and roundrobin from different hosts, different processes, and in our lab environments we are generating telemetry of what NORMAL looks like to model it - remember AD domains need to work inspite of your malware. By iterating through many permuations of PEMs we generate, in live XDR labs, we can fine tune our enumerations to fall within a thresold of activity that the typical Windows domain is already performing in the background.

Another option is to generate FALSE postives, and generate Event ID flows, that mimic real network activity but that in reality does not exist. There will be more around this in a further blog post, alongside some PoC videos.

This merely gives you an idea of the aim and approach in order to break away from the possbility of these tools, mostly relying on Clustering approaches from being able to apply a set of rules to our actions.

Inter-Beacon Connection

In the inter-beacon communication, we can apply similar approached. Typically the beacons will connect to each other via SMB named pipes, and while the inter-beacon communication can also be shifted, we are working towards modelling typical software in an organisation.

For example where a typical beacon to beacon communication might be:

- Two processs

- SMB used a mechanisms to send data

- Similar process names, activity, memory region size

- Time intervals may jitter but may be evaluated

To rather having an implant on the DMZ mimicing an ASPX application, communicating to another implant mimicing a MSSQL process, to the end beacon domain being CSS or JS querying HTTPS traffic, to other beacons communicating with the original beacon as though accessing the application. Here you have SQL traffic, HTTPS traffic, internal HTTP traffic, jobs encoded in JS and CSS and so on and so forth.

Again modelling the typical telemetry generated by industry standard applications can allow us to develop distribuated traffic patterns, that allows us to mimic internal applications and all their components, and traffic patterns to break rule based correlation.

Conclusion

We have outlined some of our progress with Turul and MalwareGAN and stay tuned for more videos to come around how our Post Exploitation endeavors against leading XDR tooling comes along.

R&D

Turul C2 & Turul GAN

Explore our research program, demos, and technical roadmap behind Turul.

Explore R&DTraining

Machine Learning for Red Teams

Hands-on training on ML foundations, clustering, classification, and model abuse for operators.