Atlan Team

Introduction:

With advances in Machine Learning now dominating the news, it is impossible to ignore how quickly the field is developing. This is especially evident when you are conducting Red Team operations and are up against EDRs and XDRs. In this write-up I will share some insights and broad conceptual ideas around how and why we are leveraging a Generative Adversarial Network (GAN) to approach malware implant design.

As a partner at KPMG once said on an internal Red Team meeting call: ‘mindset is critical’! This has stuck with me throughout this project as a reminder that hypotheses are just as important as directed brute-force technical skill and purely human cunning.

Taking an adversarial mindset means that one should look at any problem from above (mindset) and consider what it is we are actually attacking: an EDR is a Machine Learning model. We will be breaking this down below in a broad conceptual way, but fundamentally it’s a basic set of logical assumptions and a raw data set, which is then refined by statistical mathematics into a vast set of rules for making accurate predictions.

I have seen some fascinating work by others in the industry. For example, certain researchers delve extremely deeply into the world of image load events for dynamic evasion, others focus on LLVM obfuscation for static evasion, and others work on a wide range of techniques for unhooking AMSI, Event Tracing and similar. I personally believe that the results of these endeavours are not always worth the time and energy invested to achieve them. Automating this process with our own model is exactly what we have been working on at Atlan, following the approach below.

Our GAN will focus on targeting Microsoft Advanced Threat Protection (ATP) or Microsoft Defender for Endpoint (I will use the terms interchangeably despite the name change) for two reasons:

- Anyone can get access to ATP for as little as £40–60 per month

- A best-in-class EDR is a worthy target. Microsoft, with its entire E5 technology stack, has created in my view one of the most advanced (& constantly evolving) sets of ML models around. Its strength is based on the sheer volume of labelled data that it has access to, including USERLAND & KERNEL telemetry pulled in from across its enterprise empire.

Technical Approach

The technical approach we are taking for developing our GAN will be broken down into a few sections. Each section will discuss, in limited conceptual detail, the varying approaches used for solving each problem set. This will arm you with the knowledge to follow later blog posts on how we create our machine learning model and the associated labs and tools, which will delve deeply into each area.

Part 0. Primer on ML clustering & classification

The two primary areas of ML I have focused on since 2020 are K-means and hierarchical clustering, which by no means makes me an ML expert. ML is a deep and complex field. But I do have some prior experience delving into ML models (K-means: clustering & classification) from co-developing SharpML and doing some logical auditing of a Coronavirus ML modelling algorithm with our Saudi partners (Fiduciam Global Consulting). The lessons learnt on these projects have informed how I approach implant design ever since.

I have been fortunate enough to have worked with my old research manager, Marco, who was profoundly excellent in this domain. Naturally, I was more focused on an adversarial mindset, wanting to understand how to break ML models.

(NOTE: I recently had an interesting chat with someone from NCC Group who indicated that areas such as blackbox ML testing are niche. Nothing could be further from the truth. In reality, these days phishing, maldev, evading network forensics and more are all pretty much just blackbox ML testing on a technical level, simply because of how ubiquitous ML models are in security products. So ML hacking is very much mainstream whether you realise it or not.)

To begin, we must first cover what hierarchical clustering and K-means clustering are. It doesn’t matter if you don’t see the relevance to malware development straight away. It was this mindset, and a wide knowledge base that allowed me to develop evasive malware when working at KPMG.

K-means (Unsupervised):

K-means clustering is used in a wide range of fields, including genetics, cancer research (flow cytometry) and is extremely effective at finding correlations between various datapoints.

Let’s say for example that you are looking at millions of events happening inside the Windows operating system. These will consist of processes & threads being created, file operations, network connections being made and much more.

Example of events plotted on a diagram. This is merely an illustration: the X axis represents time, and the Y axis would likely represent some feature of the event taking place.

In the dummy image above you can see things like file operations, network connections being made, and processes & threads being created. Now clearly EDRs are able to take inputs of labelled data, and a multi-million-pound ML model doesn’t produce 2-dimensional output as simple as that shown above. But this illustrates what is happening conceptually: data is fed in and run through the model, and then correlations are made.

Given that we as consultants don’t actually need to understand the maths behind it (in fact, neither do most ML engineers, as they mostly use libraries too), diving deep into the maths behind this process is beyond the scope of this write-up.

If we take those random events above, the ML model will try to understand which datapoints are correlated, even events that don't share the same process. So, let's draw a circle around where there is a cluster of events taking place. Again, I would emphasise that this is very watered down and purely conceptual, and I am merely trying to convey the concepts for further discussion around malware development and subsequent posts on how we are building our GAN.

The shape itself is calculated using various mathematical formulas, but is also based on the assumptions that you are making for that particular model. Let’s say that circle of events represents a new binary being run (MS Paint.exe), with the axis at the bottom representing time. Processes and threads are created, file operations are performed, network connections are made, etc.
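To make the idea of drawing a circle around a cluster a little more concrete, here is a minimal sketch of k-means (Lloyd's algorithm) in plain Python. The event coordinates are invented for illustration (time in seconds on the first axis, an arbitrary feature score on the second), and nothing here reflects how ATP actually represents or clusters events.

```python
import random

# Invented events: (time in seconds, arbitrary feature score).
# Two bursts of activity, one early and one late.
events = [
    (1.0, 0.20), (1.1, 0.25), (1.2, 0.30), (1.3, 0.20),
    (9.0, 0.80), (9.1, 0.85), (9.2, 0.90), (9.3, 0.80),
]

def squared_distance(a, b):
    """Squared Euclidean distance between two 2-D points."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

def kmeans(points, k, iterations=20, seed=0):
    """Lloyd's algorithm: returns (centroids, labels) for 2-D points."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct datapoints
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = (
                    sum(m[0] for m in members) / len(members),
                    sum(m[1] for m in members) / len(members),
                )
    return centroids, labels

centroids, labels = kmeans(events, k=2)
for event, label in zip(events, labels):
    print(f"t={event[0]:>4}s feature={event[1]:.2f} -> cluster {label}")
```

The two bursts end up in separate clusters, and each centroid is the centre of one "circle". Note that the time axis dominates the distance here because the features are not scaled; a real pipeline would normalise its features first.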

A computer doesn’t think like us. We see MS Paint.exe open; the ML model sees that within a process various WinAPI calls are being made, network connections are opened, and file operations are performed when you save the image. It doesn’t care or know what MS Paint.exe is, and in the case of an EDR doing malware analysis, the model is looking to find correlated events even in remote processes, threads and files.

It is important to note that k-means clustering generally scales better to large volumes of data than hierarchical clustering: each k-means iteration is roughly linear in the number of datapoints, whereas standard agglomerative hierarchical clustering is at least quadratic. This is why K-means clustering is favoured when looking at dynamic events. Even so, correlating events at that volume takes time, which is why I suspect it sometimes takes a while for ATP to flag certain events as suspicious after the fact. While I could be wrong, attacking black box models requires making some assumptions.

That’s a very brief introduction to K-means clustering and how we conceptualise one aspect of the machine learning model we are coming up against. If you want to read more about k-means, be my guest; you will learn all about centroids, clusters and more. We have released a Machine Learning for Red Teams training where we dive deeply into these areas, so check it out here.

Hierarchical Clustering (Unsupervised):

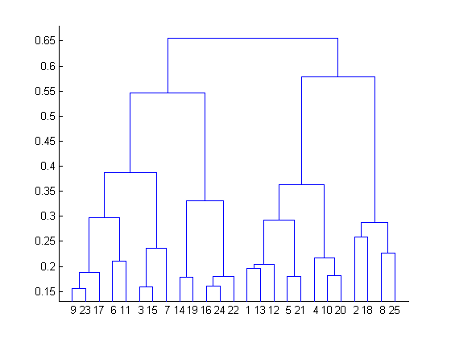

This is what is called a dendrogram. The axis on the left is similarity, and each of the little rectangles can be conceptualised as a cluster of similar properties.

I personally find it helpful to conceptualise the static analysis element of an EDR model as using hierarchical clustering even if in reality it is more complex than this. In this mental model the EDR builds clusters of properties to understand what constitutes the properties of a single binary and how those properties might match the properties of malware.
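As a rough illustration of this mental model, the sketch below runs single-linkage agglomerative clustering over a handful of made-up feature vectors and prints the merge order, which is the information a dendrogram draws. The sample names (`app_a`, `susp_x` and so on), the three features and all of the values are hypothetical; they are assumptions for illustration and not features ATP is known to use.

```python
import math
from itertools import combinations

# Hypothetical, hand-made feature vectors scaled to 0..1:
# (relative file size, byte entropy / 8, code signed? 1.0 : 0.0)
samples = {
    "app_a": (0.20, 0.55, 1.0),
    "app_b": (0.25, 0.60, 1.0),
    "app_c": (0.22, 0.50, 1.0),
    "susp_x": (0.40, 0.95, 0.0),
    "susp_y": (0.42, 0.97, 0.0),
}

def linkage_distance(a, b):
    """Single linkage: distance between the closest pair of members."""
    return min(math.dist(samples[i], samples[j]) for i in a for j in b)

def agglomerate(names):
    """Merge the two closest clusters until one remains; return the merge history."""
    clusters = [frozenset([n]) for n in names]
    history = []
    while len(clusters) > 1:
        a, b = min(combinations(clusters, 2), key=lambda pair: linkage_distance(*pair))
        history.append((sorted(a), sorted(b), round(linkage_distance(a, b), 3)))
        clusters = [c for c in clusters if c not in (a, b)] + [a | b]
    return history

merges = agglomerate(list(samples))
for left, right, height in merges:
    print(left, "+", right, "at distance", height)
```

Samples with similar properties merge early at small distances, while the final merge between the two groups happens at a much larger distance. Cutting the tree just below that last merge is what separates the two families.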

If a typical binary has a certain size, a certain type of code base, metadata, strings and other properties, then you can begin to create clusters of properties representing what a normal binary looks like. Except, and this is the beauty of malware development, there is no such thing as a normal binary.

It is much easier to develop clusters of what malicious binaries look like:

- Cobalt Strike shellcode size being roughly 400 KB

- An implementation of an AES or XOR algorithm

- Some kind of syscall functions (e.g. NtCreateThreadEx)

Reviewing GitHub and the blog posts that the hacking community (including myself!) frequently borrows from, you can find some extremely similar properties in the code shared across them all (transplanted into Nim, Rust, C++ etc). These consistently similar properties are a piece of cake for hierarchical clustering to correlate.

ML Conclusion:

Now that we have a conceptual understanding of what type of Machine Learning might be implemented in the engine used by Advanced Threat Protection (this is blackbox testing after all), we can develop a hypothesis as to how we can circumvent and evade it!

A measure of success for this hypothesis is that it, along with its associated assumptions, has paid dividends when developing malware on engagements and in the testing lab.

By manually testing the incremental progress of ATP and other EDRs including SentinelOne, Carbon Black and Cylance, I have developed a strong sense of which EDR is putting more weight on certain correlations of events or static properties of a binary.

Based on my observations, ATP, with its ability to monitor the kernel (alongside all its telemetry to evolve the model), will dominate in the long term. It is interesting to watch leading EDR providers pivot into managed services and consulting. I don't believe that it's merely diversification - some of their core R&D folks seem to have moved on to greener pastures. Especially now, with Microsoft's investment into OpenAI (or more like ClosedAI now), we can only expect ATP to develop at an even more rapid pace.

Part 1. Static Analysis

Now let’s first look at what an ML model might look at when using hierarchical clustering to evaluate the properties of a binary.

While there will be many more areas to consider, I am going to focus on three main areas: entropy, strings and file analysis. I found that these areas paid the most dividends for me personally. However the work we are doing with the GAN will shed much more light on this.

Part 1.A Entropy Analysis

Real malware design takes time and effort, and is usually incredibly difficult to do but often attempted under tight time limitations. You are wrestling with the entirety of an ML model that is constantly learning and evolving from hundreds of millions of telemetry events. You can speed this process up by applying some concepts from maths and physics!

Let’s start by discussing entropy and what it is.

Entropy is the foundation upon which all cryptographic functions operate. Entropy, in cyber security, is a measure of the randomness or diversity of a data-generating function. Data with full entropy is completely random and no meaningful patterns can be found.
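For a concrete sense of the numbers, byte-level Shannon entropy is H = -Σ p_i · log2(p_i), where p_i is the fraction of bytes with value i. It ranges from 0 bits per byte (a single repeated value) to 8 bits per byte (all 256 values equally likely). The sketch below is a minimal measurement helper using only the standard library; the sample buffers are assumptions chosen for illustration, with `os.urandom` standing in for well-encrypted data.

```python
import math
import os
from collections import Counter

def shannon_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte: 0.0 (constant) up to 8.0 (uniform)."""
    if not data:
        return 0.0
    total = len(data)
    # Sum p * log2(1/p) over every byte value that actually occurs.
    return sum((n / total) * math.log2(total / n) for n in Counter(data).values())

constant = bytes(4096)  # 4096 zero bytes
text = b"The quick brown fox jumps over the lazy dog. " * 90
random_bytes = os.urandom(4096)  # stand-in for encrypted content

for label, blob in (("constant", constant), ("english text", text), ("random", random_bytes)):
    print(f"{label:>12}: {shannon_entropy(blob):.2f} bits/byte")
```

Plain text and compiled code sit well below the 8-bit ceiling, while random or encrypted data sits very close to it, which is what makes entropy a cheap and useful feature for a classifier.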

What does this mean and why is this relevant? Well, when you encrypt some shellcode then the entropy is going to increase.

So XOR encoding increases the entropy somewhat, but it is easy for an EDR to bruteforce, especially as you will need to have the key inside the binary to decode, even if you do environmental keying. AES encryption increases the entropy further, and so on: the more random the output, the higher the entropy.

As the ML model will use entropy as a data point, binaries with high levels of entropy are likely to be clustered with other binaries that have high entropy. Encryption is used when you want to hide something!

Once you take a binary that is not code signed, is roughly the same size, and has an encrypted/encoded section of around 400 KB (Cobalt Strike shellcode), it’s not very hard even for the untrained eye to cluster these similarities together, let alone a frighteningly fast ML model with vast computational resources.

Part 1.B Entropy Evasion

Let’s discuss how we can measure and actually evaluate how much entropy certain sections of your binary have. I am not going to go into the full details, as this has already been done here, where you can find a Python script on @oxPat’s blog.

Using that Python script, you can run it against your binary and evaluate the level of entropy in the sections of your PE file.

Ideally you want a situation whereby the ML model has full insight into what it is seeing, no obvious key to bruteforce inside the binary, and low entropy.

Lots of teams have moved for this very reason to steganography. ATP (or the ML model) will note an image, and (I’m assuming) may not even be concerned about the levels of entropy of an image file, especially not a png/bitmap image (png uses lossless compression and bitmap is uncompressed)[read more here about image entropy]. Always think back to that image I showed around hierarchical clustering and what associations are being made and then imagine all the binaries with favicons, logos, images and more floating around on Windows machines globally and whether it would make sense to be considering the entropy of images, from a computational perspective (think £).

I am sure the team at Microsoft will begin to work on analysing images for shellcode, which is already being done manually: stego-toolkit. A next-stage insight that I will eventually be writing about, Next-Generation Environmental Keying, will solve this problem for the attacker. I will present an implementation in code as well. The original idea of next-gen environmental keying was not mine, but came from a senior manager at KPMG.

Part 1.C Strings & Codebase

While it is currently not entirely clear exactly what strings or specific indicators in your code base may trigger ATP responses, bar some obvious and documented posts around using common syscalls or similar function names, our GAN will work to comprehensively identify exactly what is triggering it.

Keeping in mind the correlative model of what a normal and malicious binary might look like, you can begin to see why so many payloads fail. It’s no use merely fine-tuning your injection methodologies, or ensuring that you are encrypting and decrypting shellcode in memory, or whatever low-level abuse you are focusing on, if the model can correlate your activity to an initial vector (be it a DLL or similar) with these static properties. That vector is likely to be clustered with other malware.

Hackers are not developers, but we are required to develop in multiple languages doing complex operations that even seasoned developers find challenging.

However, it is important to bear in mind that typical binaries are basically all code that isn’t malware. There are mathematical, graphical, engineering and a whole range of other functions that normal developers will use, including WinAPI functions. That is why junk code is not to be underestimated, and why restructuring shellcode in memory can be more effective than merely preventing the model from understanding what it is doing.

Part 1.D Strings Obfuscation

While many malware developers use some very smart approaches to hide what they are doing from EDR, including compile-time string obfuscation, control flow flattening and randomising syscall function names (whether using something like this, or AsStrongAsFuck, or any number of C# NuGet packages), I disagree with these approaches unless you’re sure that Reverse Engineers are going to be looking at your implants. In the commercial space this is not a worry for us, but for Nation States, where attribution is important, it is relevant. But then again, foreign-language code comments, (Russian?) timezone beaconing times and other methods may prove better than code implementation at putting off analysts.

A very quick approach is to take an existing application. Simply type into Google “C# Gui app Github” and download a solution, then embed your malicious code into new classes inside the application and divert the flow of the main function to your own functions. Then rename, for example, NtCreateThreadEx to something similar already inside the actual application that you are embedding into.

I will not go into this in more detail now, but what would stop you from running strings on the actual Microsoft Teams binary (for example, as in the past when powrprof.dll was used with Teams for proxying) and then using the same strings found inside the actual DLL itself to name your functions? [NOTE: When I was reading in the past about ManagedExports and attempting to export DllMain, I came across this post by Mandiant, by Evan Pena, Ruben Boonen and Brett Hawkins, around abusing DLL misconfigurations, which seems to confirm my hypothesis relating to string/code analysis.] How many legitimate binaries in the wild have randomly generated function names? Not many. We want to fit in, not stick out. Furthermore, what stops you from generating a macaronic language based on the naming conventions of all the official code within the Windows OS?

I know this part of my hypothesis works because, in an afternoon’s work at KPMG, I was able to develop an implant generator that bypassed Cylance and Carbon Black on first compile (it did require further debugging later with my teammate Mike to map syscalls to other OS versions [in 2021]). Defender freemium also failed to analyse it, but ATP E5 did, because of some mistakes I made, and it took me a little longer to hypothesise and experiment as to what ATP was doing.

Let’s think back to what I believe the ML is doing, because the ML model is not just looking at your binary. ATP is at once (using Cloud Submission) looking at the entire Windows estate. If your binary looks more like other binaries that are not malware, then when the hierarchical clustering (as I hypothesise) is performed, your binary is going to blend in rather than be correlated with malware.

Part 1.E File Analysis

File analysis looks at the various sections of a PE file, whether it is code signed, and the file size to evaluate the binary. I’m sure it looks at other areas too, and in our GAN adventure we will be reporting exactly what it looks at.

I won't belabour this one too much, but there are some great resources on the PE file format, on how to spoof code signing and similar. Unless you work for an intelligence agency, though, good luck making sure that this part of your binary is right (this is addressed below).

It would be my bet, given that Microsoft works closely with the US government, that they (NSA etc) can borrow code signing certs, so those of us in the commercial space will have to make do with less. You can visit this post, which presents a list of certificate authorities you can buy a valid code signing certificate from. You will need to incorporate a company to do so.

Throughout our GAN posts we will present in detail what we have managed to coax out of ATP about exactly what it is looking for.

Part 1.F File Evasion

While we could discuss the relative merits of using LLVM obfuscation (good post here) if you are programming in C, C++ or even Nim, or alternatively discuss managed vs unmanaged code in C#, when keeping hierarchical clustering in mind I believe these techniques are not serving your best interests. Going for managed code is, in my opinion, your best bet, if of course your requirements allow.

Allowing the ML models to inspect every element of your code is what is going to serve you better (at least in our GAN that's what we are doing), and using a programming language that allows for embedding Resources is even better, which is why I typically favour .NET.

Part 2. Dynamic Analysis

Next up is a brief overview of dynamic analysis. This aspect is especially relevant to the initial access vector, when getting your implant in and first stage code execution. There are some extremely interesting cases where without the need for raw technical skill you can put off the K-means EDR algorithm by doing things slightly differently and cases where hiding/encryption is merited.

Part 2.A Hooking & Kernel Callbacks

As anyone who has worked in malware development knows, EDRs without access to the kernel will typically hook into your processes and reroute functions to study them further. So much research has been done in this area that I don’t think it will be relevant in a few years’ time, because ATP is monitoring the kernel (which no other EDR has access to). MDSec released a fantastic blog post some time ago on Bypassing Image Load Kernel Callbacks, so the race to the low-level dark corners of the OS will no doubt continue, but Microsoft can see everything eventually.

Part 2.B Event Tracing

Event tracing is another area that is well documented by others, starting with Countercept (here) and a blog post by MDSec on hiding your .NET, so I will not go to lengths to repeat what they have said here.

Part 2.C Time Correlation

Next up is time correlation. Clearly the K-means model and cluster I showed above correlates datapoints - remember I have made the assumption that the bottom axis is time.

So, having multiple suspicious events taking place in quick succession is likely to see them clustered together as malicious events, irrespective of whether they are in the same process space or thread. This is always handy to keep top of mind.
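From the defender's side, the simplest possible version of this correlation is to group events purely by how close together they occur, ignoring which process produced them. The sketch below does exactly that with a fixed time gap. The `Event` fields, the process names and the 2-second threshold are all assumptions made for illustration; a real model would learn its groupings rather than use a hard-coded window.

```python
from dataclasses import dataclass

@dataclass
class Event:
    timestamp: float  # seconds since the start of capture
    process: str
    action: str

def group_by_time_gap(events, max_gap=2.0):
    """Split a time-sorted event stream wherever the gap exceeds max_gap seconds."""
    groups = []
    for event in sorted(events, key=lambda e: e.timestamp):
        if groups and event.timestamp - groups[-1][-1].timestamp <= max_gap:
            groups[-1].append(event)  # close enough in time: same group
        else:
            groups.append([event])    # gap too large: start a new group
    return groups

# Invented telemetry: three events in quick succession from different
# processes, then two isolated events much later.
stream = [
    Event(0.5, "proc_a", "file_write"),
    Event(1.0, "proc_b", "process_create"),
    Event(1.4, "proc_c", "network_connect"),
    Event(60.0, "proc_d", "file_write"),
    Event(300.0, "proc_e", "network_connect"),
]

groups = group_by_time_gap(stream)
for index, group in enumerate(groups):
    names = ", ".join(f"{e.process}:{e.action}" for e in group)
    print(f"group {index}: {names}")
```

The first three events land in one group even though they come from three different processes, which is the behaviour described above: proximity in time alone is enough to tie them together.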

Part 2.D Memory Analysis

Shellcode is merely code - obviously.

Like all code, shellcode in memory gets passed through the interpretation & correlation process performed by the ATP ML model, where potential hierarchical clustering is done. This can be approached in a similar fashion to static analysis evasion. Rather than encrypt, we focus on modifying the shellcode itself to blend in.

Atlan is redeveloping MovFuscator to enable x86-64 .NET compilation to better understand the work we need to do for this. Later we will focus on how we can apply the same methodologies to adapting in-memory shellcode, in a similar way to how we approach static analysis. Stay tuned.

Part 3. Bringing us onto our next step: GAN

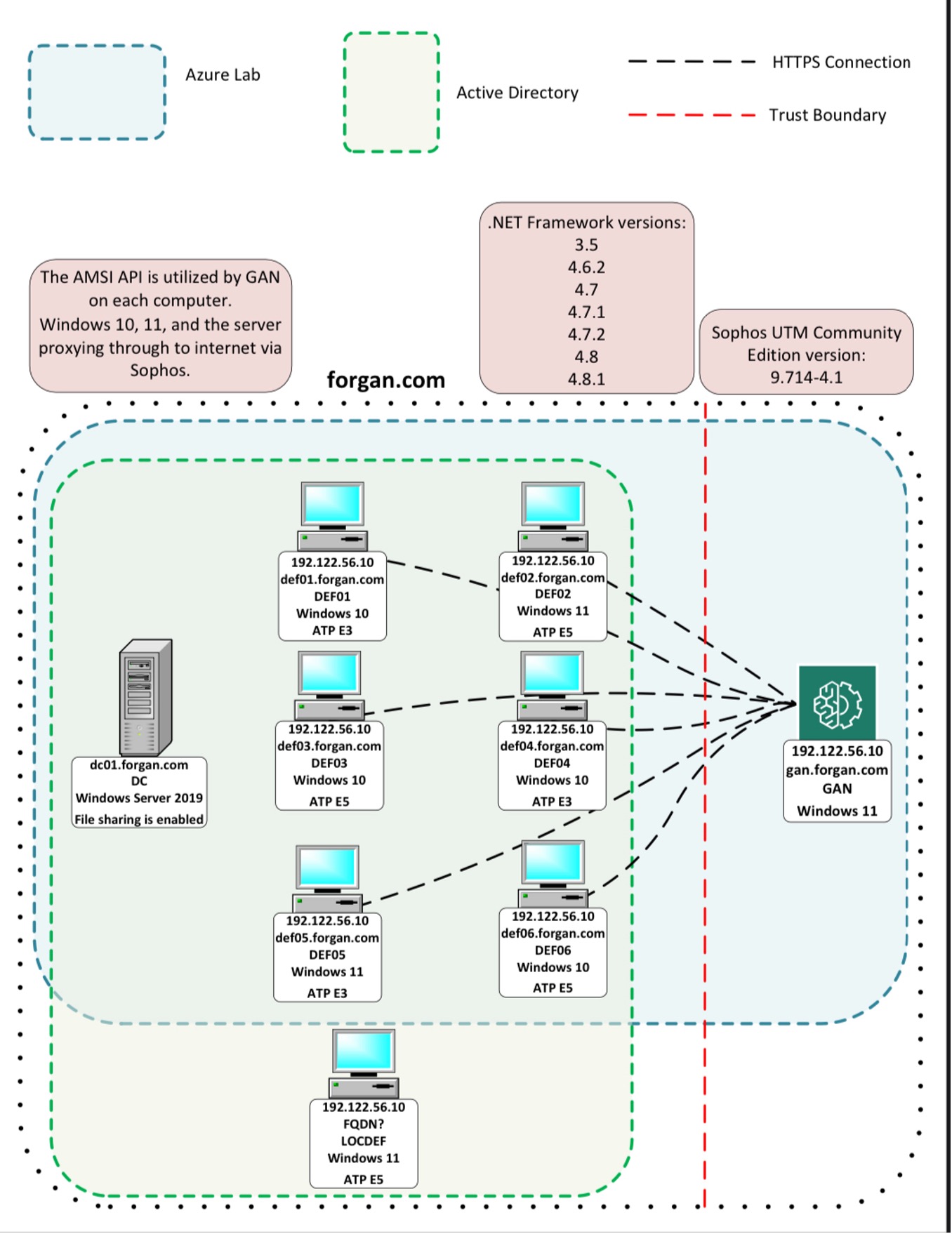

In a series of blog posts to be released over the next four months, we will be highlighting how we built the lab (an earlier draft topology is shown in the accompanying image for your perusal) to systematically reverse engineer the model behind ATP, represent the different elements and features of malware mathematically, and subsequently build an automated Malware Generative Adversarial Network to produce malware that evades Microsoft's ATP 100% of the time.

Remember, ATP needs to be 100% effective to stop us; we only need to get one past it, and we can continuously retrain our own model.

Given that you can repeat this, with ATP acting as the discriminator, we expect this approach to fairly comprehensively defeat ATP models in a practical way.

Read part 2 here
